
1. Alibaba Releases the Qwen3 Model

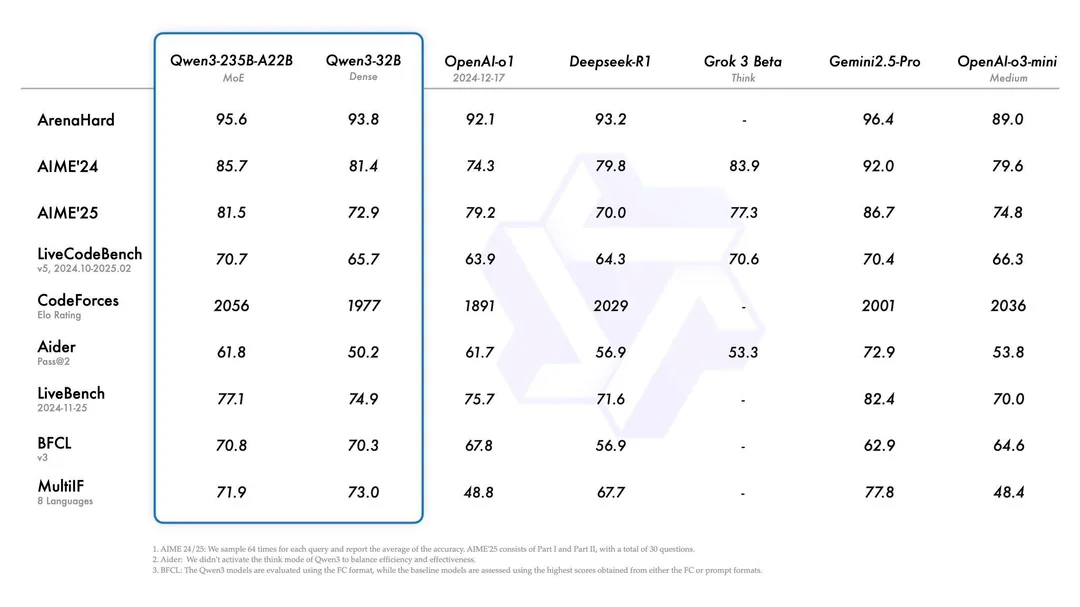

On April 29, 2025, Alibaba unveiled its next-generation AI model, Qwen3, which it boldly positions as the new global leader in open-source large language models (LLMs). According to Alibaba Cloud, the flagship Qwen3-235B-A22B model outperforms top competitors like DeepSeek-R1, OpenAI’s o1/o3-mini, Grok-3, and Google’s Gemini 2.5-Pro across critical benchmarks for coding, mathematics, and general reasoning.

I’m used to every company claiming to be the world’s best when launching a new product, so I’ll try it out myself and see how it actually performs.

2. What Makes Qwen3 Special?

Two major highlights:

Hybrid Reasoning Model

- Inspired by Claude 3.7, Qwen3 introduces adaptive reasoning: it switches dynamically between reasoning and non-reasoning modes.
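As a rough illustration of mode switching, the sketch below appends a `/think` or `/no_think` tag to the latest user message; the Qwen3 model card describes these tags as per-turn soft switches. The helper name `with_reasoning_mode` and the sample messages are hypothetical, and whether a given runtime honors the tags is an assumption to verify for your own setup.

```python
from copy import deepcopy


def with_reasoning_mode(messages, think):
    """Return a copy of `messages` whose last user turn carries a soft switch.

    Hypothetical helper: `/think` and `/no_think` are the per-turn tags
    described in the Qwen3 model card; everything else is illustrative.
    """
    tag = "/think" if think else "/no_think"
    updated = deepcopy(messages)  # leave the caller's list untouched
    # Walk backwards to find the most recent user turn.
    for message in reversed(updated):
        if message["role"] == "user":
            message["content"] = f"{message['content'].rstrip()} {tag}"
            return updated
    raise ValueError("no user message to tag")


chat = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "What is 17 * 24?"},
]
fast_chat = with_reasoning_mode(chat, think=False)  # quick answer, no reasoning
deep_chat = with_reasoning_mode(chat, think=True)   # step-by-step reasoning
print(fast_chat[-1]["content"])
print(deep_chat[-1]["content"])
```

If you use Hugging Face Transformers instead, the model card also documents an `enable_thinking` argument to `tokenizer.apply_chat_template`, which sets the default mode for the whole conversation.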

Massive MoE Architecture (235B Parameters)

-

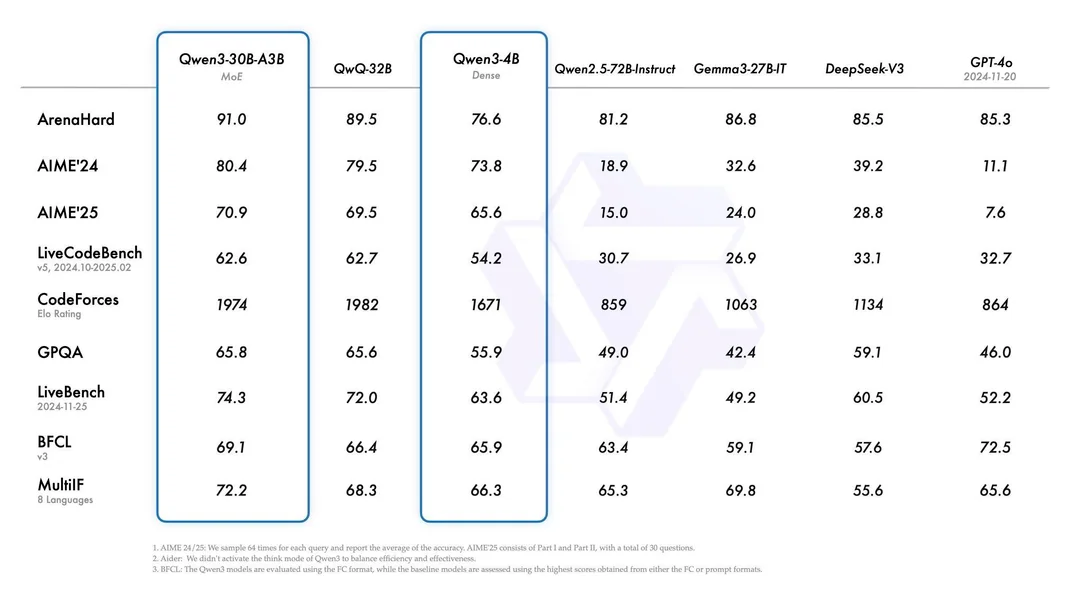

Previous Qwen models maxed at 72B, the bigger the model, the better it works-until you hit the hardware wall.

-

Qwen3-235B-A22B (Mixture of Experts) narrows the gap, marking a significant upgrade.
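To put the "hardware wall" in numbers, here is a back-of-the-envelope sketch. The parameter counts come from the model name (235B total, 22B active); the bytes-per-parameter figures are assumed typical values for each weight format, and the estimate covers weights only, ignoring the KV cache and activations.

```python
TOTAL_PARAMS = 235e9   # "235B" in the model name
ACTIVE_PARAMS = 22e9   # "A22B" in the model name: parameters active per token

# Assumed storage cost per parameter for common weight formats (illustrative).
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def active_fraction(total, active):
    """Share of the parameters that take part in computing each token."""
    return active / total


def weight_memory_gb(params, bytes_per_param):
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


print(f"active per token: {active_fraction(TOTAL_PARAMS, ACTIVE_PARAMS):.1%}")
for fmt, size in BYTES_PER_PARAM.items():
    print(f"{fmt}: {weight_memory_gb(TOTAL_PARAMS, size):.1f} GB of weights")
```

Under these assumptions, only about 9% of the parameters are used for any one token, which reduces compute per token. All experts still have to be stored, though, so memory remains the limiting factor on consumer hardware.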


3. Community Reactions

I checked user reactions on Reddit, and the discussion is quite lively, with positive feedback. Some people mentioned that running the Qwen3-30B-A3B MoE model on a 4090 GPU is very slow under Ollama, while the speed in LM Studio is as expected. If you encounter the same issue, give LM Studio a try.

4. Download Qwen3 Models

Hugging Face Collection: 🔗 https://huggingface.co/collections/Qwen/qwen3-67dd247413f0e2e4f653967f
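If you prefer to fetch a model from a script rather than the browser, a minimal sketch with the `huggingface_hub` package could look like this. It assumes the package is installed and that repositories follow the `Qwen/<model name>` pattern; the helper names `repo_id_for` and `download_model` are hypothetical.

```python
def repo_id_for(model_name, org="Qwen"):
    """Build a Hub repository ID such as 'Qwen/Qwen3-30B-A3B' (assumed naming)."""
    return f"{org}/{model_name}"


def download_model(model_name, local_dir=None):
    """Download every file in the repository and return the local path."""
    # Imported lazily so repo_id_for works without the dependency installed.
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=repo_id_for(model_name), local_dir=local_dir)


# Nothing is downloaded here; this only shows the repository ID that would be used.
print(repo_id_for("Qwen3-30B-A3B"))
```

Calling `download_model("Qwen3-30B-A3B")` starts the actual download, so check your free disk space first.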


5. Experience

After trying it myself, I found that it is indeed an improvement over Qwen 2.5. However, it is still difficult to evaluate exactly how much progress has been made. I will provide more feedback after further use.