1. How to Install Ollama?

Go to the official Ollama website and download the client. Ollama supports macOS, Linux, and Windows, and installation is straightforward: after downloading, follow the prompts to complete it.

2. Which Large Language Model to Choose?

The large language model field is currently like an arms race: the strongest model this month may be overtaken by a competitor next month, so the ranking changes constantly. Overall, the open-source large models from the following companies are all quite good:

- Microsoft: phi4
- Meta: Llama
- Alibaba: Qwen
- Google: Gemma
- Mistral
- DeepSeek: DeepSeek

3. Which Parameter Size Model to Choose?

This depends on your graphics card, especially the amount of VRAM. With 16 GB of VRAM, you can generally run 7B or 14B models, while 32B models may be challenging. Test other configurations as needed.
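The sizing guidance above can be sanity-checked with a rough back-of-the-envelope calculation. The sketch below is only an approximation under stated assumptions that do not come from the article: weights quantized to about 4 bits each, plus roughly 20% extra for the KV cache and runtime buffers. The function names are hypothetical, and real usage varies with quantization format and context length.

```python
def estimate_vram_gb(params_billion: float, bits_per_weight: float = 4.0,
                     overhead: float = 1.2) -> float:
    """Rough VRAM estimate (GB) for running a model.

    Assumptions (illustrative, not from the article): weights use
    `bits_per_weight` bits each, and KV cache plus runtime buffers
    add about 20% on top of the weights.
    """
    # 1 billion parameters at 8 bits per weight is roughly 1 GB.
    weight_gb = params_billion * bits_per_weight / 8
    return weight_gb * overhead


def fits(params_billion: float, vram_gb: float, **kwargs) -> bool:
    """Return True if the estimated requirement is within the VRAM budget."""
    return estimate_vram_gb(params_billion, **kwargs) <= vram_gb


if __name__ == "__main__":
    for size in (7, 14, 32):
        need = estimate_vram_gb(size)
        print(f"{size}B: ~{need:.1f} GB, fits in 16 GB: {fits(size, 16)}")
```

Under these assumptions, 7B and 14B models fall within a 16 GB budget while a 32B model does not, which matches the guidance above. Treat the numbers as a starting point and confirm by actually loading the model.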

4. How to Download a Model?

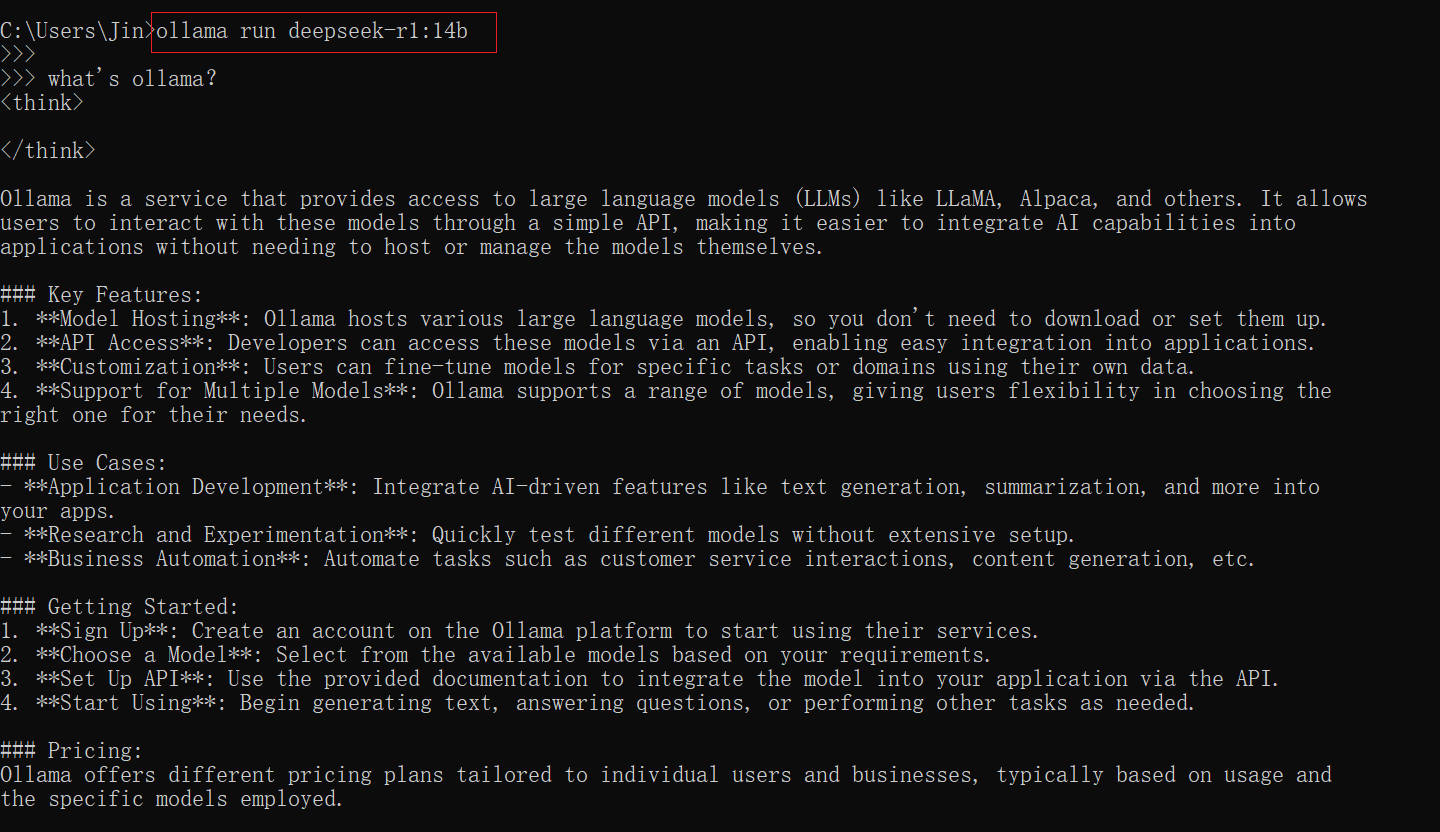

It is very easy. For example, to install DeepSeek R1 14B, use `ollama run deepseek-r1:14b`. Just change the text after “run” to the model you want to install.

- Run `ollama run deepseek-r1:14b`. If deepseek-r1:14b does not yet exist locally, this command downloads it from the remote server.
- If the model already exists, Ollama loads and uses it. Use it like the ChatGPT web interface: enter a prompt or question, and it returns a response.
- You can use the `ollama list` command to check which models you have already installed.
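Beyond the interactive commands above, a running Ollama instance can also be queried from a script over its local HTTP API. The following is a minimal sketch, assuming the server listens on its default address `http://localhost:11434` and exposes the `/api/tags` and `/api/generate` endpoints; the helper names (`model_names`, `list_installed`, `ask`, `demo`) are hypothetical and chosen only for illustration.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumed default local address


def model_names(tags_response: dict) -> list[str]:
    """Extract model names from the JSON returned by GET /api/tags."""
    return [m["name"] for m in tags_response.get("models", [])]


def list_installed(base_url: str = OLLAMA_URL) -> list[str]:
    """Rough programmatic counterpart of `ollama list`."""
    with urllib.request.urlopen(f"{base_url}/api/tags") as resp:
        return model_names(json.load(resp))


def build_generate_request(model: str, prompt: str) -> bytes:
    """Encode a non-streaming request body for POST /api/generate."""
    body = {"model": model, "prompt": prompt, "stream": False}
    return json.dumps(body).encode("utf-8")


def ask(model: str, prompt: str, base_url: str = OLLAMA_URL) -> str:
    """Send one prompt and return the model's full reply as text."""
    req = urllib.request.Request(
        f"{base_url}/api/generate",
        data=build_generate_request(model, prompt),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)["response"]


def demo(model: str = "deepseek-r1:14b") -> None:
    """Requires a running Ollama server; not called automatically."""
    if model in list_installed():
        print(ask(model, "Explain VRAM in one sentence."))
    else:
        print(f"{model} is not installed; run: ollama run {model}")
```

With Ollama running, calling `demo()` checks whether the model is installed before sending a prompt. Unlike `ollama run`, this sketch does not download a missing model; it only reports that it is absent.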

5. Other Options

If the CLI feels cumbersome, try a GUI alternative such as LM Studio, covered in “Recommended Tools for Running Large Language Models Locally – LM Studio”.