
1. Go Try Dify!

I believe many of you might have come across the name “Dify” on forums or in tech news. You might want to try it but hesitate, worrying that “Dify is high-tech, and I don’t know programming or technology.”

Speaking from experience, I want to make two points.

First, deploying Dify is simple; you don’t need programming skills. Of course, having them is better, but not having them doesn’t hinder usage.

Second, Dify isn’t a silver bullet. It might not meet all your expectations, but it could also be exactly what you need. In short, you can only truly know what it’s like by trying it yourself.

2. Installing Docker

2.1 Installing Docker on Windows

Open the official Docker website, then download and install Docker Desktop for Windows.

In your command prompt (cmd), check the Docker version to verify if Docker has been installed successfully:

docker --version

docker-compose --version

2.2 Installing Docker on Linux

Users of RPM-based Linux distributions such as CentOS can install Docker directly with the following two commands:

yum install -y docker

yum install -y docker-compose

Note: yum is specific to RPM-based distributions like CentOS. On Debian/Ubuntu, use apt-get instead. On macOS, Docker Desktop is typically used.

3. Installing Dify

3.1 Get Dify Source Code with Git

Official GitHub:

git clone https://github.com/langgenius/Dify.git

Downloading the ZIP file directly is not recommended, because it makes upgrading Dify more troublesome later on.
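To illustrate why a Git checkout helps with upgrades, here is a minimal sketch of an update routine. The helper name upgrade_dify, the default folder name Dify, and the main branch are assumptions for illustration; check the official upgrade notes before relying on it.

```shell
# Hypothetical helper: update a Git-based Dify checkout and restart it.
# Assumes the repository lives in ./Dify (or the path passed as $1)
# and that releases are pulled from the main branch.
upgrade_dify() {
  repo_dir="${1:-Dify}"
  (
    # Run in a subshell so the caller's working directory is unchanged.
    cd "$repo_dir/docker" &&
    docker compose down &&      # stop the running containers
    git pull origin main &&     # fetch the latest source and compose files
    docker compose pull &&      # download the updated images
    docker compose up -d        # start Dify again in the background
  )
}
```

Calling upgrade_dify with no argument uses the Dify folder in the current directory. With a ZIP download there is no Git history, so the git pull step would have to be replaced by downloading and unpacking a new archive by hand.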

3.2 Installing Dify using Docker

-

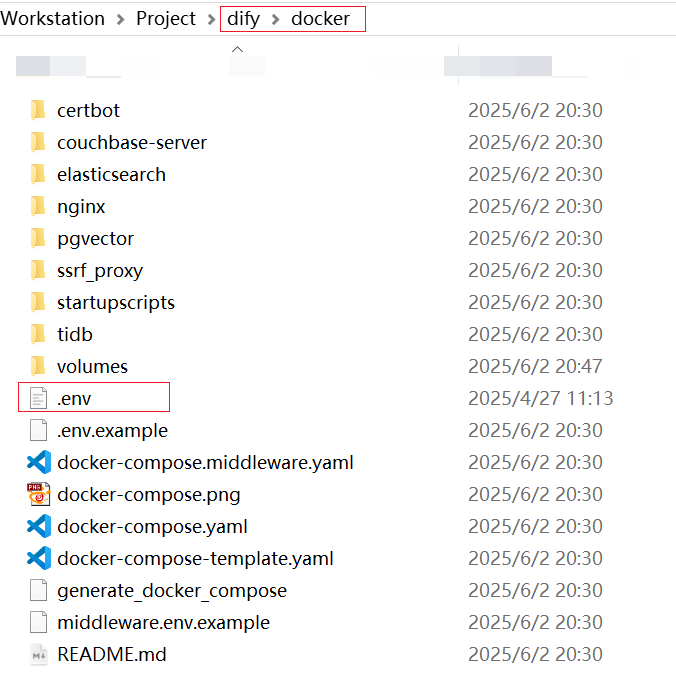

Find the

.env.examplefile in thedockerfolder, make a copy, and rename it to.env. -

Navigate to the directory containing the

docker-compose.yamlfile in your terminal and run the command:docker compose up -d. Docker will then automatically install Dify according to the configurations indocker-compose.yaml. In most cases, you don’t need to worry about the parameters and details ofdocker-compose.yaml; just wait for it to complete the operation. -

-

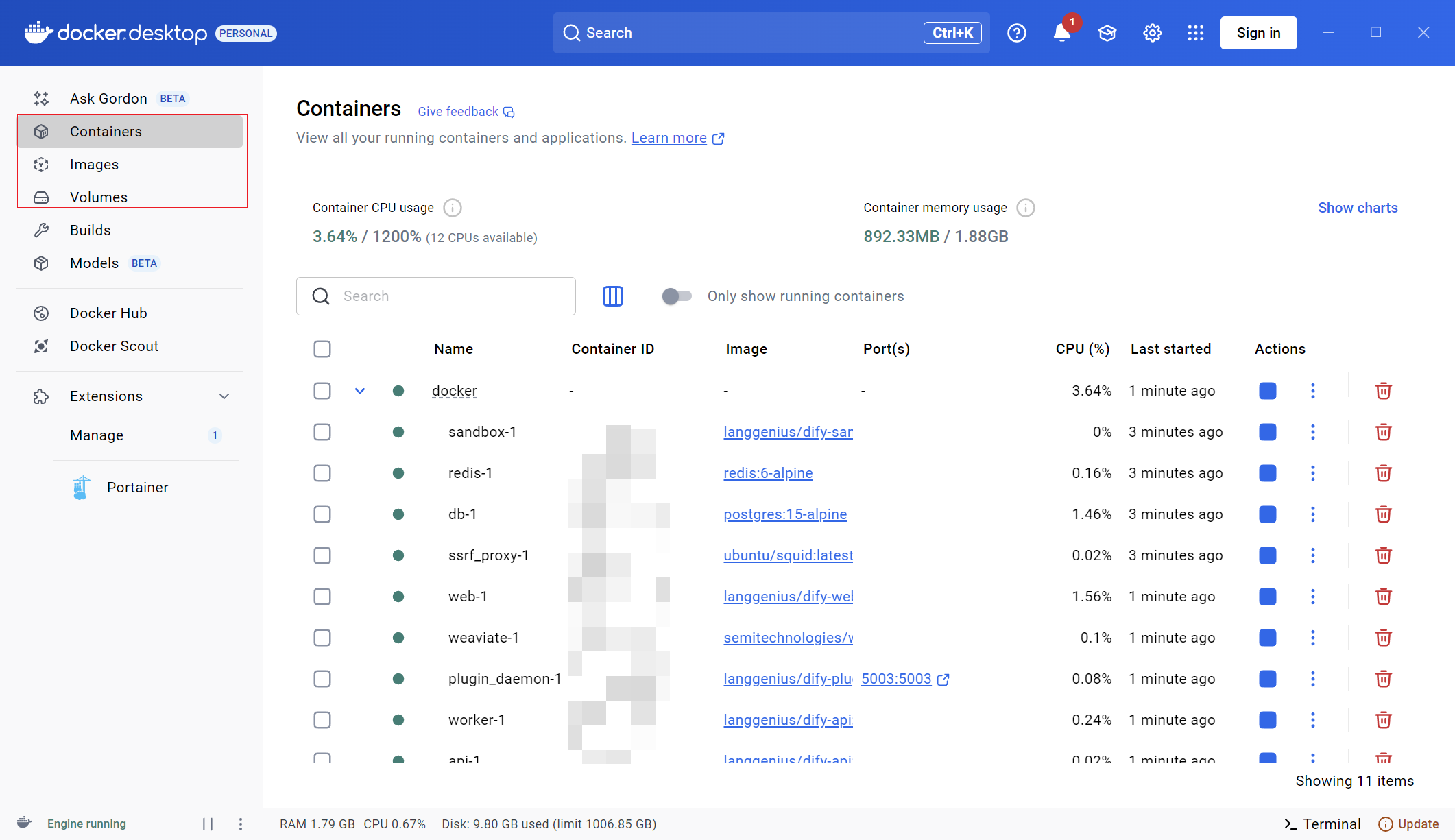

After successful installation, go back to the Docker Desktop interface. You’ll see some newly installed images and containers – this is Dify, and it’s running.

-
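The steps in this section can be sketched as a small shell routine. The helper name prepare_env is hypothetical, and the path Dify/docker assumes the repository was cloned with the command from section 3.1.

```shell
# Hypothetical helper: create .env from .env.example only if .env does not
# exist yet, so an existing configuration is never overwritten.
prepare_env() {
  docker_dir="$1"
  if [ ! -f "$docker_dir/.env" ]; then
    cp "$docker_dir/.env.example" "$docker_dir/.env"
  fi
}

# Typical use from the directory where the repository was cloned:
#   prepare_env Dify/docker
#   cd Dify/docker
#   docker compose up -d     # start all Dify services in the background
#   docker compose ps        # list the containers and their status
```

Guarding the copy matters mainly on later runs: once you have edited .env, repeating the setup should leave your changes alone.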

3.3 Accessing the Dify Page

Once the containers are running, open the Dify web interface in your browser.

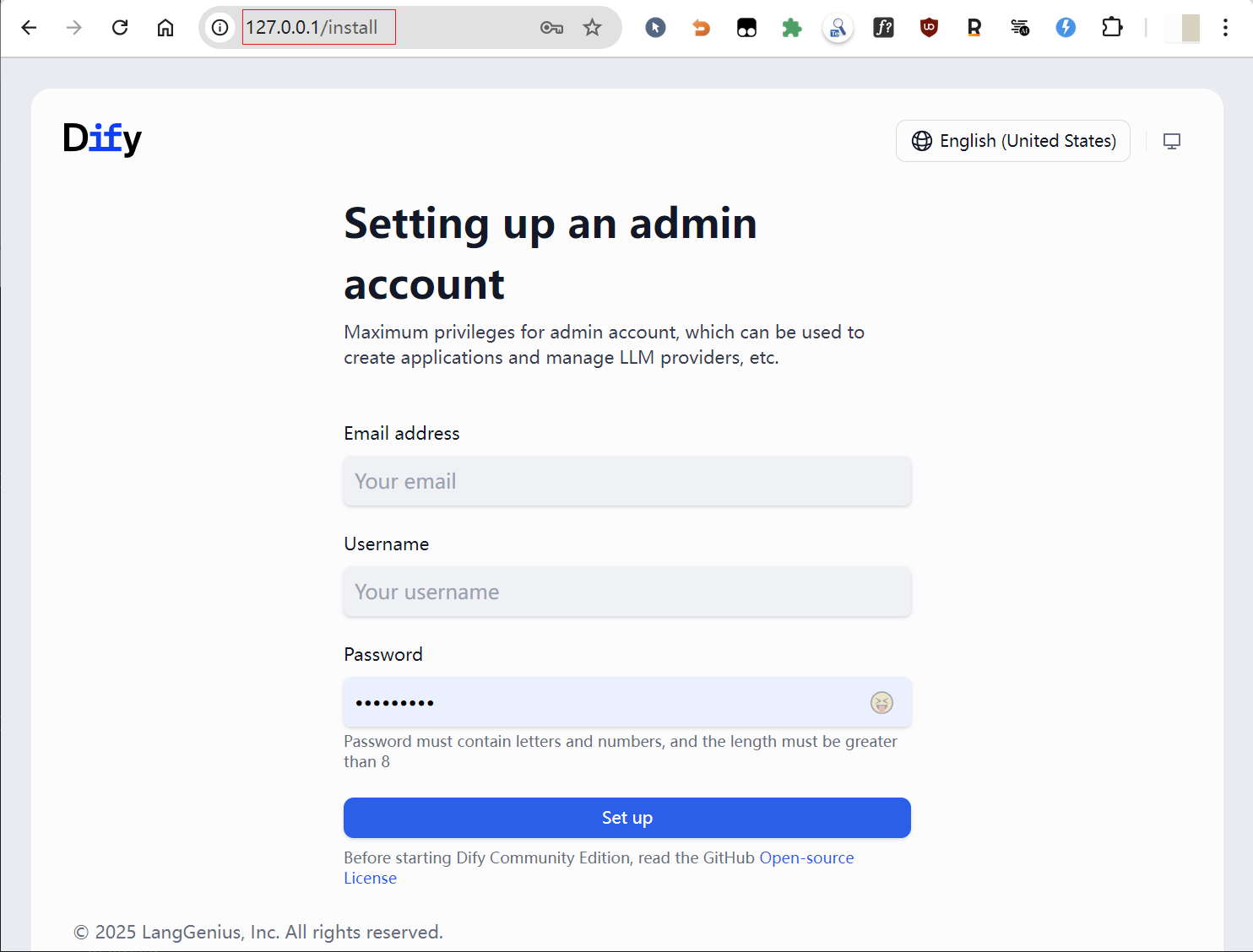

3.4 Setting Up Your Account and Password

3.5 Logging In

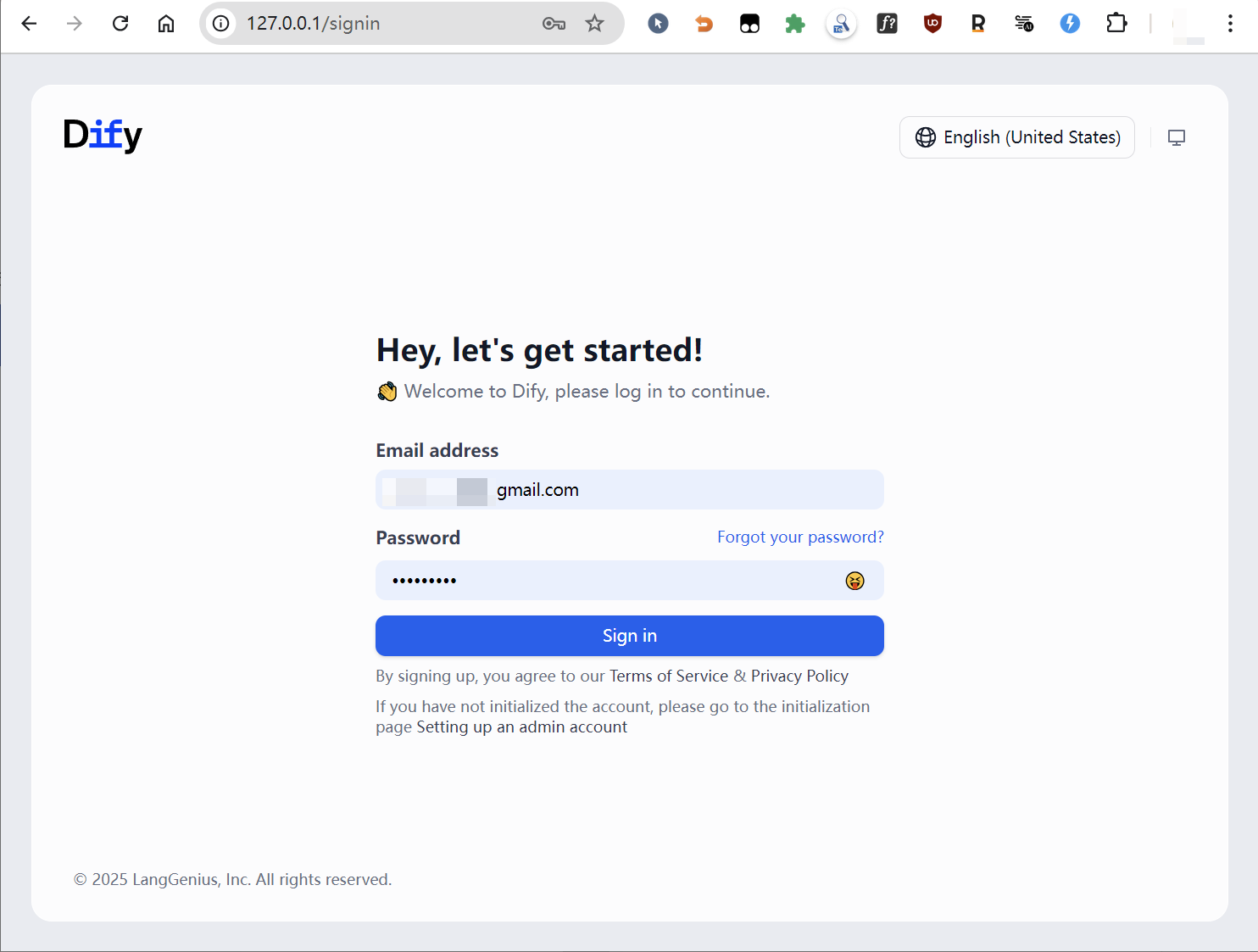

3.6 Reaching the Workspace

4. Configuring Local Large Models (LLMs)

Why configure local large models? I know that major companies now offer free web-based LLM applications like Gemini and ChatGPT, but these are not convenient for integration into Dify’s workflow.

Furthermore, buying API access from OpenAI isn’t economically viable for everyone. Therefore, deploying local large models is an economical and practical solution. Open-source LLMs like Mistral, DeepSeek, Qwen, Gemma, and Phi are all excellent choices.

4.1 Installing Ollama

- Visit the official Ollama website and download the installer directly.

- Install a large model, for example:

ollama run deepseek-r1:14b

- Use the following command to see which models you have installed:

ollama list

4.2 Configuring Local LLMs in Dify

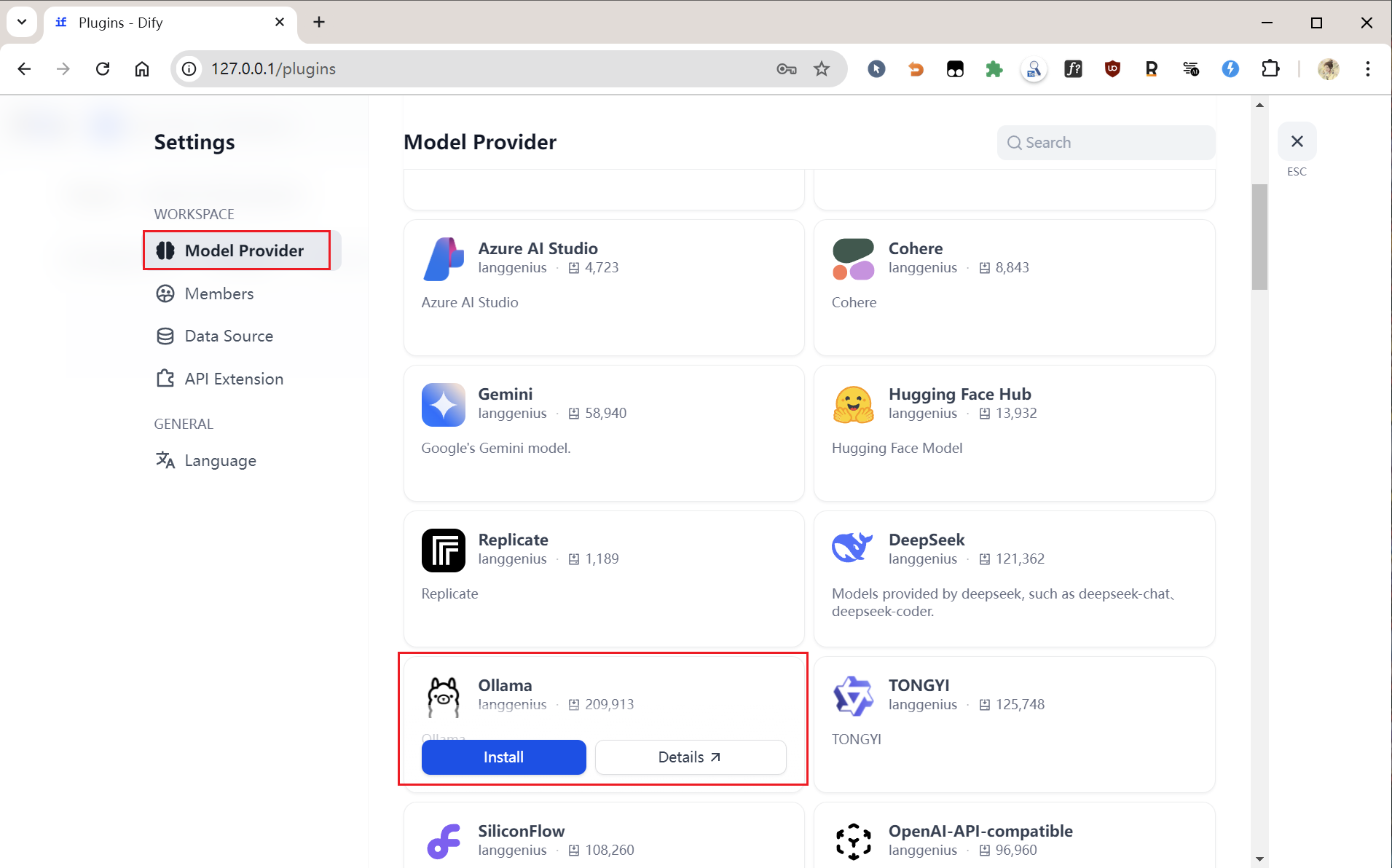

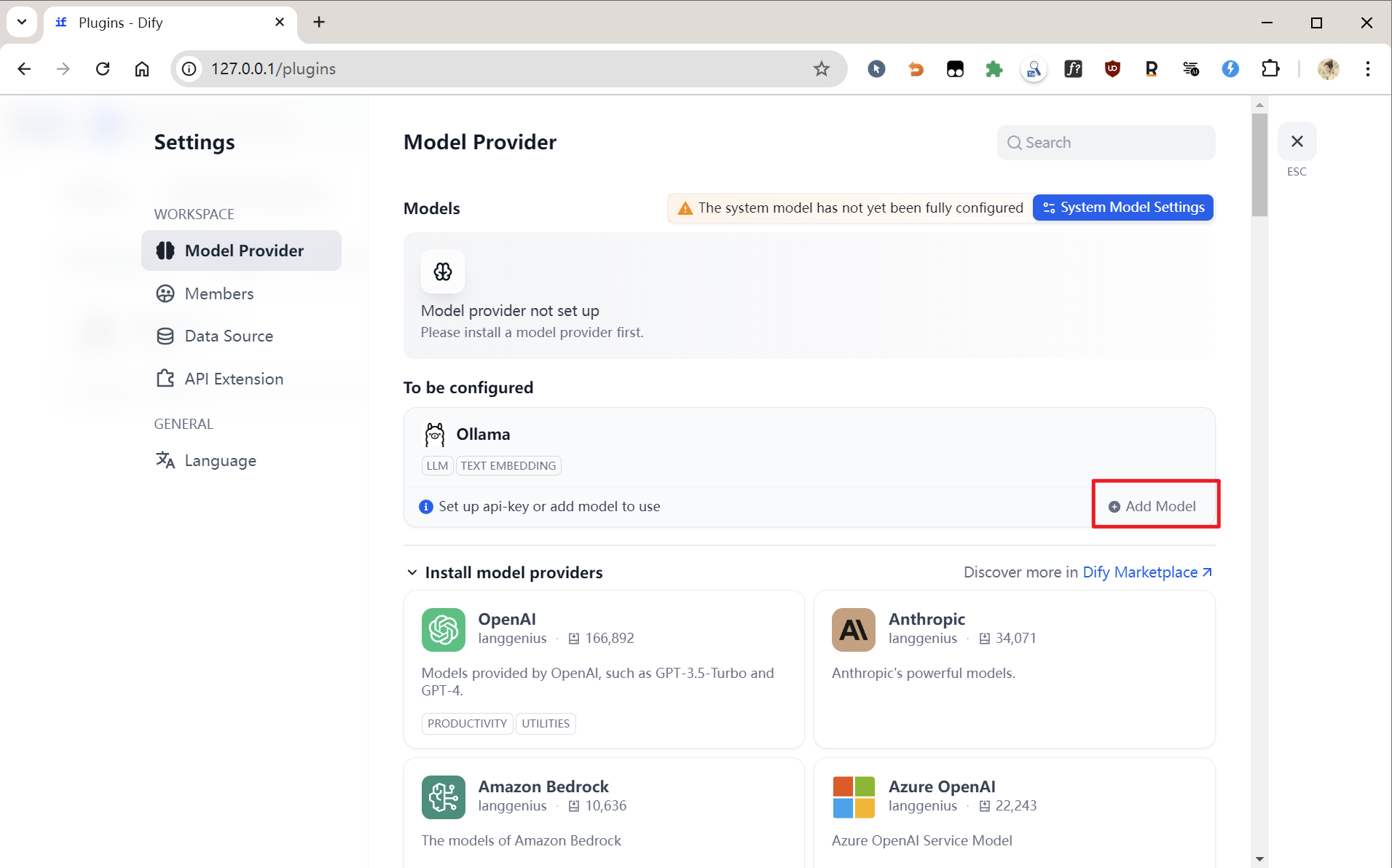

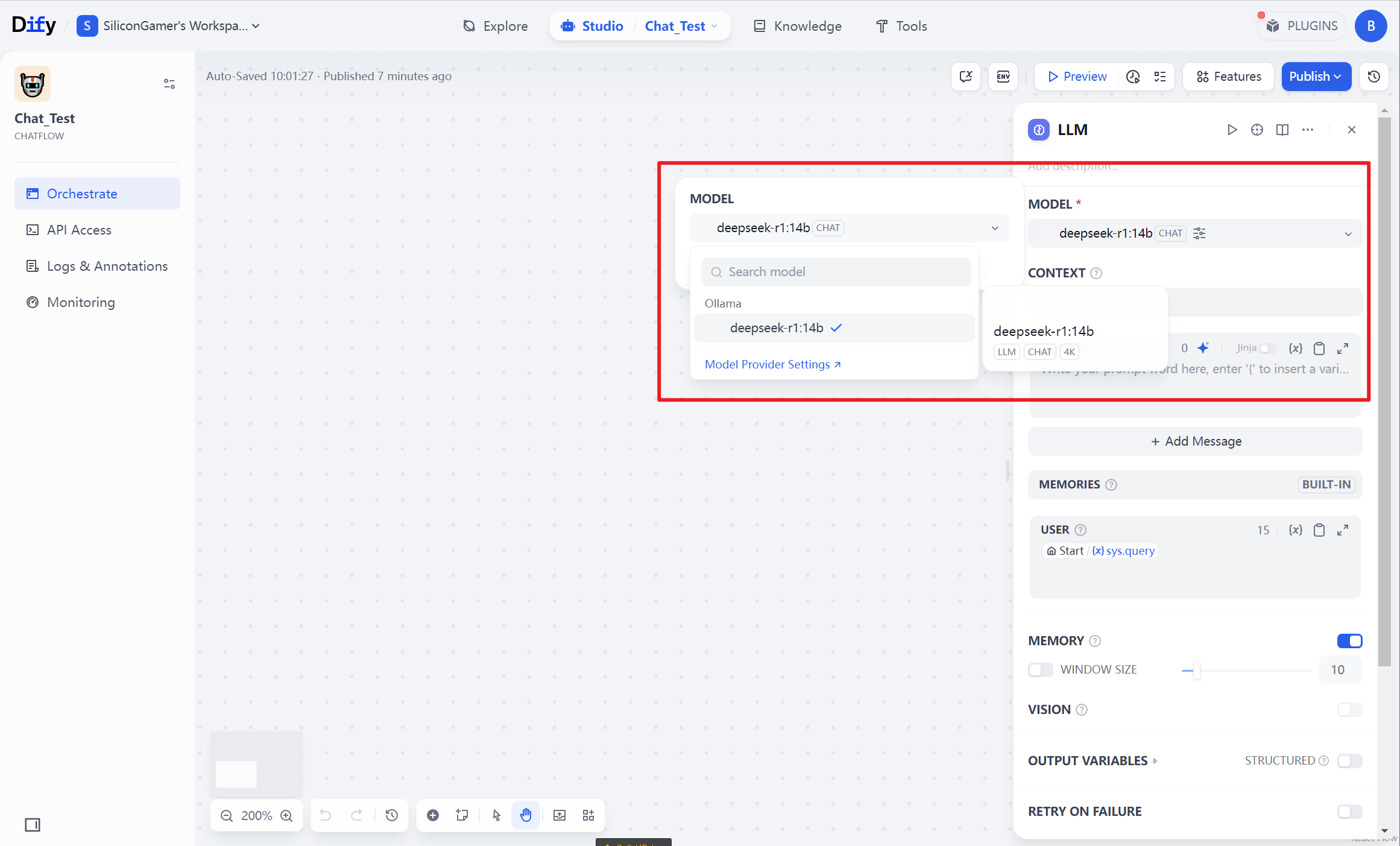

Go to the “Settings” page, click on “Model Providers,” find the provider you wish to use (in my case, Ollama), and click “Add model.”

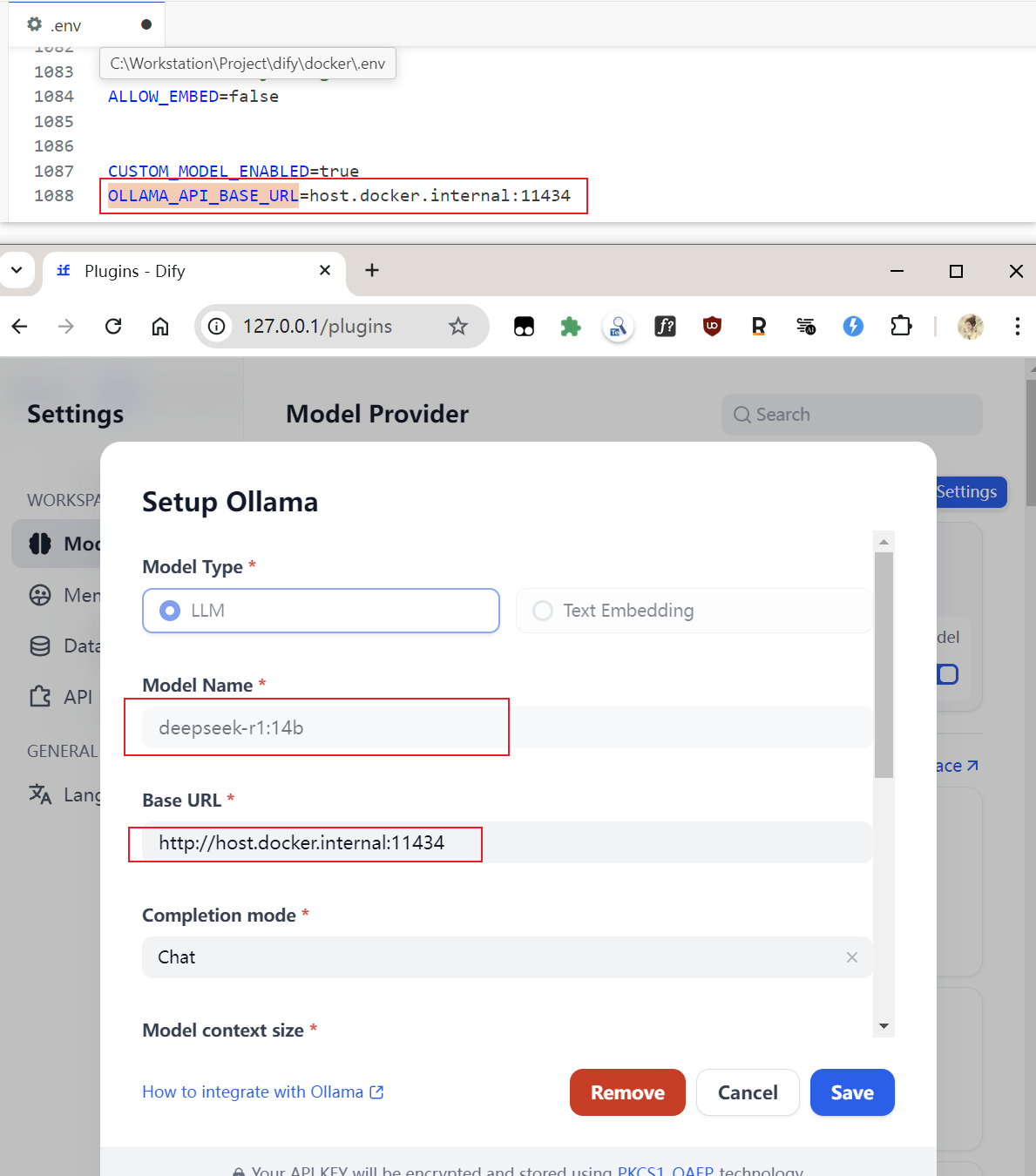

If Dify is deployed via Docker (as described above), enter http://host.docker.internal:11434/ in the Base URL field. For other Dify deployment methods, refer to the help documentation.

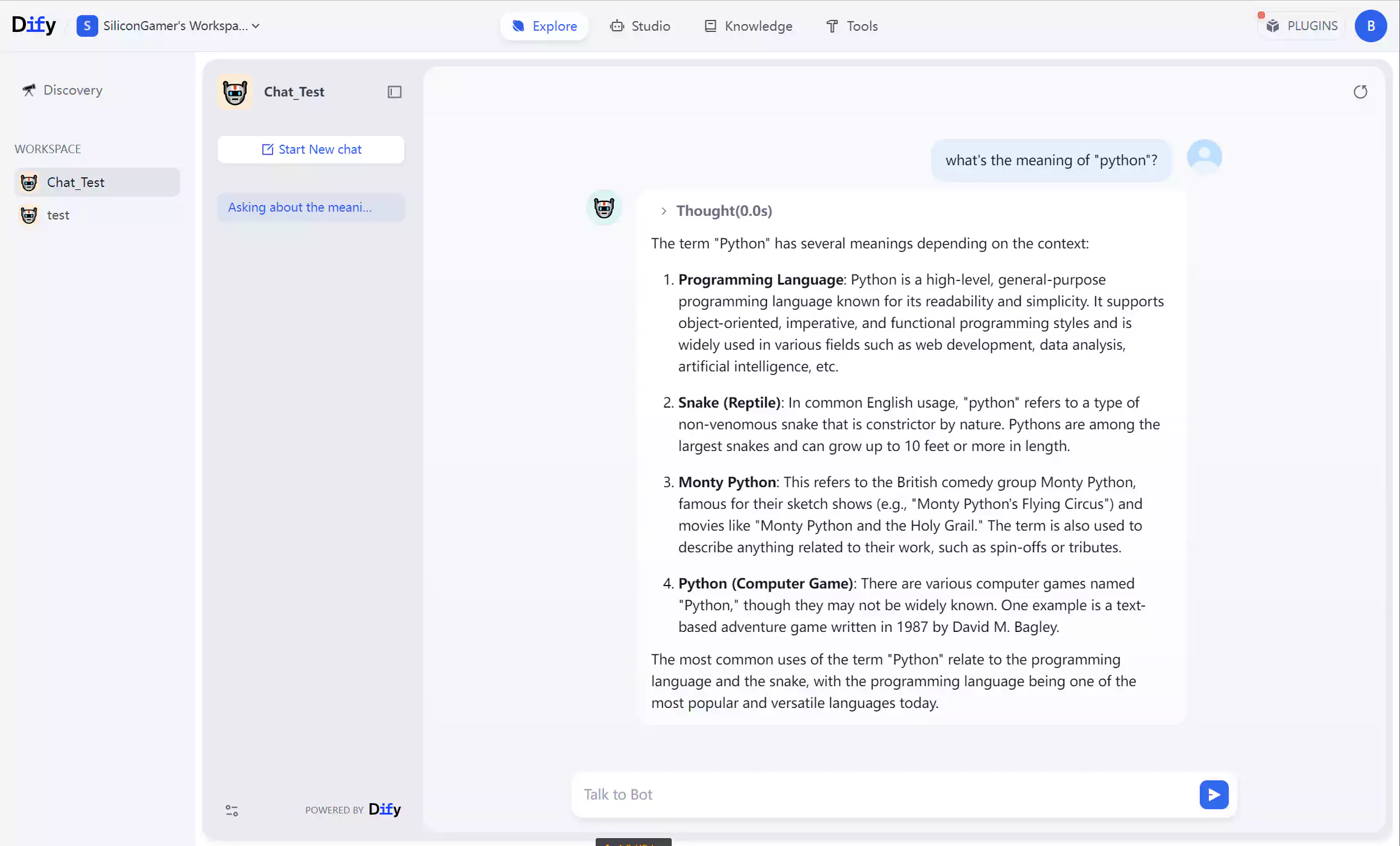
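If the model cannot be added, it may help to confirm that Ollama is reachable at the Base URL first. The sketch below builds the address of Ollama’s model-listing endpoint (/api/tags); the helper name ollama_tags_url is hypothetical, and the curl calls are left as comments because they depend on your setup.

```shell
# Hypothetical helper: build the URL of Ollama's model-listing endpoint
# from a base URL, tolerating a trailing slash in the base.
ollama_tags_url() {
  base="${1%/}"
  printf '%s/api/tags\n' "$base"
}

# On the host machine, where Ollama listens on port 11434:
#   curl "$(ollama_tags_url http://localhost:11434/)"
# From inside a Dify container, the same check would target:
#   curl "$(ollama_tags_url http://host.docker.internal:11434/)"
```

A JSON list of models in the response suggests the Base URL is correct. A connection error from inside the container usually points to a networking issue rather than a Dify setting.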

4.3 Creating a Chat Agent

4.4 Asking Questions in the Chat Agent

As you can see, Dify is calling the local large model.

5. What Can Dify Do? (Dify’s Capabilities)

5.1 Visual Development and Orchestration

- No-Code Prompt Engineering
  - Design AI workflows (like conversation logic, data processing) using a drag-and-drop interface, with real-time Prompt debugging and preview.
  - Built-in prompt optimization tools, supporting Few-shot Learning to enhance model output quality.
- Flexible Multi-Model Switching
  - Integrates mainstream commercial models (e.g., GPT-4, Claude 3.5) and open-source models (Llama 3, Mistral), with support for quickly adding new models within 48 hours.
  - Allows private deployment, connecting to models in local or dedicated cloud environments.

5.2 Agents and Automation

- Complex Task Handling
  - Supports task decomposition, reasoning, and tool invocation (ReAct framework) for tasks like automatic report generation, logo design, or travel planning.
- Extensible Tool Ecosystem
  - Over 50 built-in tools (e.g., Google Search, DALL·E image generation, Wolfram Alpha computation), with support for custom API tools (compatible with OpenAPI/Swagger specifications).
- Multimodal Capabilities
  - Supports text, image, and code generation, combining multiple tools (e.g., using ChatGPT for copy + Stable Diffusion for images).

5.3 Knowledge Base and Retrieval Augmented Generation (RAG)

- Multi-Source Data Integration
  - Supports uploading documents like PDF/PPT/DOC, automatically chunking and cleaning unstructured data, and creating vector indexes.
- Intelligent Retrieval Optimization
  - Offers hybrid search (vector + full-text) and reranking models to precisely locate knowledge snippets and display citation sources.
- External Data Synchronization
  - Supports syncing Notion documents and web content to the knowledge base for dynamic updates.

5.4 Workflow Engine

- Complex Process Orchestration
  - Build multi-step task chains using a node-based design (e.g., LLM calls, conditional branches, HTTP requests). Example: User question → Knowledge base retrieval → Answer generation → Automatic translation → Email sending.
- Two Workflow Modes
  - Chatflow: For multi-turn conversational scenarios (e.g., customer service), with memory function.
  - Workflow: For batch processing tasks (e.g., data analysis, email automation), generating results unidirectionally.

5.5 Enterprise-Grade Deployment and Operations

- Flexible Deployment Options
  - Supports SaaS cloud service and private deployment (Docker/Kubernetes), suitable for sensitive industries like finance and healthcare.
- Team Collaboration and Security
  - Provides fine-grained permission management (e.g., “debug prompts only”), data isolation, and audit logs.
- Performance Monitoring and Optimization
  - Real-time tracking of response speed and call frequency, with support for annotating incorrect answers to iterate on the model.

5.6 Broad Application Scenarios

Dify is suitable for rapidly building AI-native applications in various fields:

- Intelligent Customer Service: 24/7 Q&A based on a knowledge base, improving satisfaction.
- Content Creation: Automatic generation of marketing copy, weekly reports, and short video scripts.
- Data Analysis: Query databases using natural language (e.g., “Which product had the highest sales last month?”).
- Education/Healthcare: Personalized learning assistants, intelligent diagnostic systems.

5.7 Developer-Friendly Features

- API-First Design: All functions are available via RESTful APIs for easy secondary integration.
- Out-of-the-Box WebApp: Built-in templates support rapid publishing, reducing front-end development costs.
- Cost Optimization: On-demand model invocation; the free tier supports GPT-3.5, and the enterprise version allows hybrid compute purchasing.
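As an illustration of the API-first point above, here is a minimal sketch of preparing a request for a chat application’s chat-messages endpoint. The helper name chat_payload, the base URL http://localhost/v1, and the DIFY_API_KEY variable are assumptions for illustration; the real values come from the API page of your own app.

```shell
# Hypothetical helper: build the JSON body for a blocking chat request.
# Note: this simple printf does not escape quotes or backslashes, so it
# only suits plain-text queries and user IDs.
chat_payload() {
  query="$1"
  user="$2"
  printf '{"inputs": {}, "query": "%s", "response_mode": "blocking", "user": "%s"}\n' "$query" "$user"
}

# Hypothetical usage against a local deployment (assumed base URL and key):
#   curl -X POST "http://localhost/v1/chat-messages" \
#     -H "Authorization: Bearer $DIFY_API_KEY" \
#     -H "Content-Type: application/json" \
#     -d "$(chat_payload 'Hello' 'user-123')"
```

The user field is an identifier you choose for the end user. For anything beyond a quick experiment, a JSON-aware tool is a safer way to build the body than string formatting.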

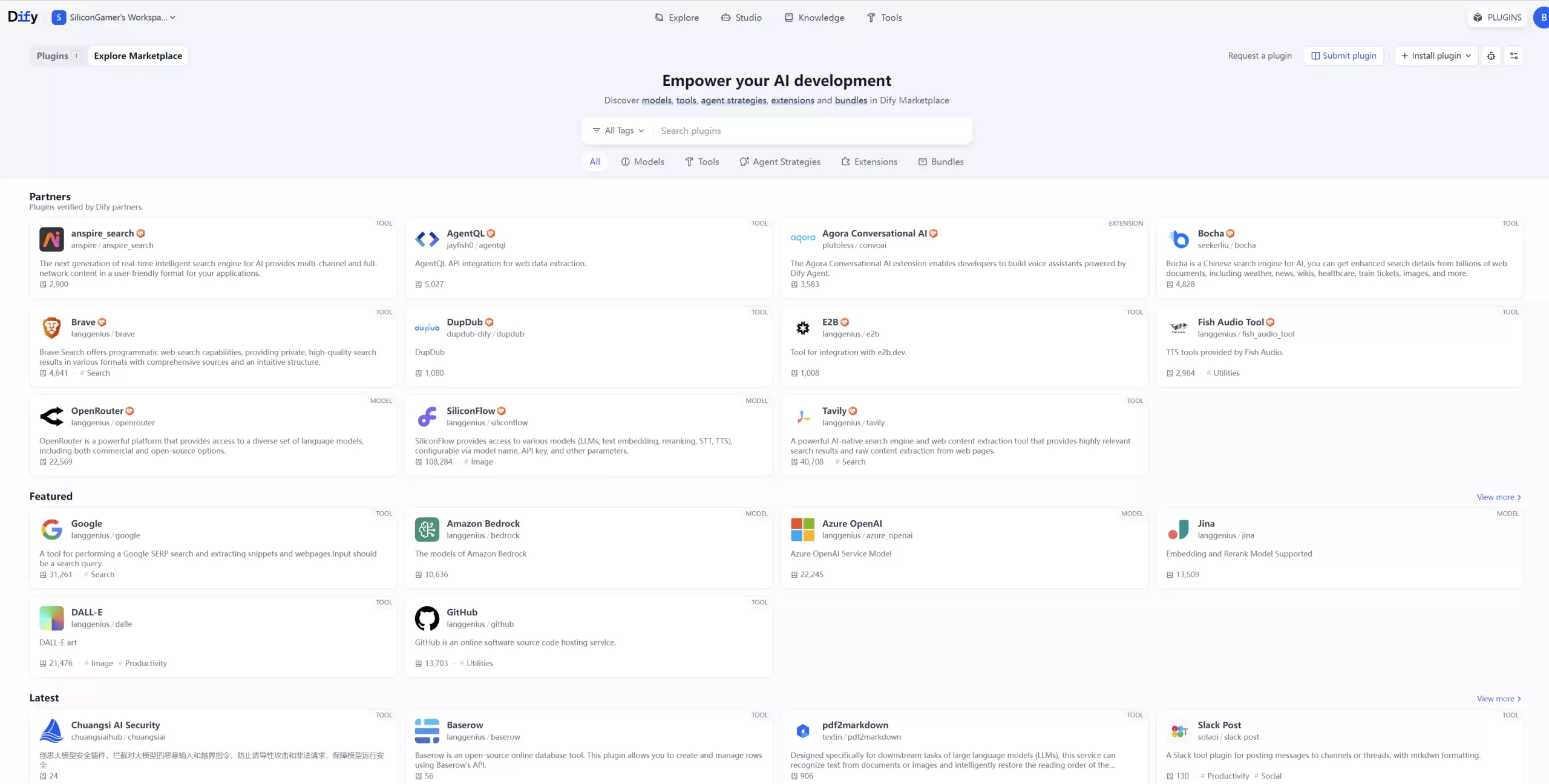

5.8 Rich Plugin System

5.9 Summary

The core value of Dify lies in simplifying LLM application development into a “Lego-like building” process through modular design and visual operation, while providing enterprise-level operational capabilities. Whether it’s a developer quickly validating a prototype or an enterprise deploying a complex AI system, both can be achieved by flexibly combining the above capabilities.

6. Have You Experienced the Difference Between Dify and ChatGPT?

ChatGPT often acts more like a Q&A chatbot. Dify, on the other hand, is more like a toolbox, and a chatbot is just one of the tools in this toolbox.